
MIT 6.00.2x Review

Review of “Introduction to Computational Thinking and Data Science”

After finishing MIT 6.00.1x on EdX (I reviewed that class in an earlier post), I jumped straight to MIT 6.00.2x: Introduction to Computational Thinking and Data Science. Overall, I’m happy I took the class and learned a lot from it, but there are several things I think it could improve on. Note that the course is fully accessible: your code for all the problem sets and exams is graded for free, and you only have to pay if you want a verified certificate after passing the course ($49, or less if you are granted EdX’s financial assistance).

As with my previous review, below are my three observations after taking the class:

1. If you can survive the first week, you’re golden

The first week came as a big shock. It was the only week that really dealt with algorithms, and the algorithms kept getting harder and harder!

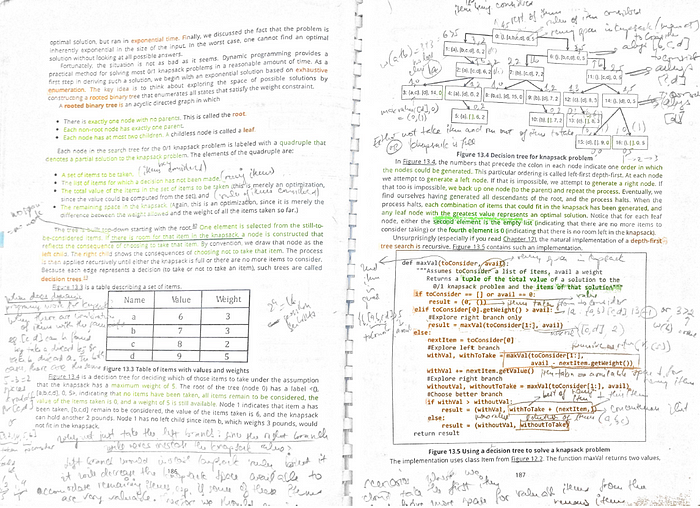

The greedy algorithm seemed manageable at first, but when it came to using a binary decision tree to solve the knapsack problem, and then adding dynamic programming on top of it to cut down the time complexity, I had to bust out my pencil and reams of paper to work out the logic of the algorithms.
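For anyone heading into that week, here is a minimal sketch of the two approaches side by side. The item representation, the function names, and memoizing on (item index, remaining capacity) are my own assumptions, not the course’s code. The greedy version picks items by a key function; the exhaustive version walks the take/skip binary decision tree and caches subproblems, which is the dynamic-programming step.

```python
from typing import NamedTuple


class Item(NamedTuple):
    name: str
    value: int
    weight: int


def greedy(items, capacity, key):
    """Take items in descending order of key(item) while they still fit."""
    taken, total_value, total_weight = [], 0, 0
    for item in sorted(items, key=key, reverse=True):
        if total_weight + item.weight <= capacity:
            taken.append(item)
            total_value += item.value
            total_weight += item.weight
    return total_value, taken


def max_val(items, i, avail, memo):
    """Best (value, taken) using items[i:] with `avail` capacity left.

    Each call is a node in the binary decision tree: the left branch takes
    items[i], the right branch skips it. The memo dict caches results keyed
    on (i, avail), so repeated subproblems are solved only once.
    """
    if (i, avail) in memo:
        return memo[(i, avail)]
    if i == len(items) or avail == 0:
        result = (0, ())
    elif items[i].weight > avail:
        # Item doesn't fit: only the right (skip) branch exists.
        result = max_val(items, i + 1, avail, memo)
    else:
        item = items[i]
        take_val, take_items = max_val(items, i + 1, avail - item.weight, memo)
        take_val += item.value
        skip_val, skip_items = max_val(items, i + 1, avail, memo)
        if take_val > skip_val:
            result = (take_val, (item,) + take_items)
        else:
            result = (skip_val, skip_items)
    memo[(i, avail)] = result
    return result


def optimal(items, capacity):
    """Start the decision-tree search at the root with an empty memo."""
    return max_val(items, 0, capacity, {})


items = [Item("A", 10, 6), Item("B", 7, 5), Item("C", 7, 5)]
print(greedy(items, 10, key=lambda it: it.value)[0])  # 10: grabs A, then nothing else fits
print(optimal(items, 10)[0])                          # 14: B + C
```

The toy example shows why the course bothers with the harder approach: greedy is fast but can get stuck with a locally attractive item, while the memoized tree search finds the true optimum.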

And oh man, depth-first search really threw me for a loop (pun intended)! There were just too many variables to keep track of during the recursion, and the professor made me feel really stupid when he said depth-first search was straightforward and easier than breadth-first search; I found just the opposite. Sure, it’s easy when you’re skimming the code and sort of understand how it works at a high level, but tracing the algorithm’s call stack really made me appreciate the little details that make the whole thing work, and marvel at the genius who invented it.
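Writing the bookkeeping out explicitly helped me. Below is a minimal sketch of a recursive depth-first search for the shortest path, next to a queue-based breadth-first search for comparison. The adjacency-dict graph format and the function names are my own assumptions rather than the course’s classes.

```python
from collections import deque


def dfs_shortest(graph, start, end, path=None, shortest=None):
    """Recursive depth-first search for the shortest path from start to end.

    graph: dict mapping each node to a list of its neighbors.
    """
    path = (path or []) + [start]  # new list per call, so backtracking is automatic
    if start == end:
        return path
    for node in graph.get(start, []):
        if node in path:
            continue  # skip nodes already on this path to avoid cycles
        # Prune: skip branches that are already as long as the best path found.
        if shortest is None or len(path) < len(shortest):
            new_path = dfs_shortest(graph, node, end, path, shortest)
            if new_path is not None:
                shortest = new_path
    return shortest


def bfs_shortest(graph, start, end):
    """Breadth-first search: the first path that reaches end is a shortest one."""
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        if path[-1] == end:
            return path
        for node in graph.get(path[-1], []):
            if node not in path:
                queue.append(path + [node])
    return None


graph = {"A": ["B", "C"], "B": ["C"], "C": ["A", "D"], "D": []}
print(dfs_shortest(graph, "A", "D"))  # ['A', 'C', 'D']
print(bfs_shortest(graph, "A", "D"))  # ['A', 'C', 'D']
```

The DFS version has to carry both the current `path` and the best-so-far `shortest` through every recursive call, which is exactly what makes it hard to trace by hand; the BFS version only needs a queue of partial paths.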

As hard as the first week was, once you get through it the course becomes much easier. Random walks and Monte Carlo simulations were fun to code because the results can be visualized, and near the end of the course we had a lesson on machine learning, which brings me to my next point.
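The two ideas fit together in a few lines: a Monte Carlo simulation just repeats a random experiment many times and averages the results. Here is a minimal sketch of a 2D random walk; the model (unit steps north, south, east, or west with equal probability) and the function names are my own simplifying assumptions, not the course’s exact setup.

```python
import math
import random

# A "drunk" takes unit steps north, south, east, or west with equal probability.
STEPS = ((0, 1), (0, -1), (1, 0), (-1, 0))


def walk(num_steps, rng):
    """Simulate one walk from the origin and return its final distance from the origin."""
    x = y = 0
    for _ in range(num_steps):
        dx, dy = rng.choice(STEPS)
        x += dx
        y += dy
    return math.hypot(x, y)


def mean_distance(num_steps, num_trials, seed=0):
    """Monte Carlo estimate: average the final distance over many independent walks."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    return sum(walk(num_steps, rng) for _ in range(num_trials)) / num_trials


for n in (10, 100, 1000):
    print(n, round(mean_distance(n, 1000), 2), round(math.sqrt(n), 2))
```

Printing (or plotting) the estimates against the square root of the step count shows the classic result: the average distance grows roughly like the square root of the number of steps, not linearly, since the mean squared distance equals the number of steps.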

2. Machine learning should play a much more prominent role in the class

I understand that this is not a machine learning course, but rather a general course on programming and data science. However, given how prevalent machine learning is in the field at the moment, I think it deserves more attention than a single lesson (not even a whole week) at the end of the class.

Furthermore, that lesson skipped several machine learning topics covered in the textbook, such as logistic regression as a classification model, evaluation metrics for classification models (accuracy, sensitivity, specificity, and so on), and the ROC curve. I found those concepts really interesting, so I wish the professor had covered them in the lectures instead of referring us to the book, as he did several times.
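Since those metrics were left to the book, here is a minimal sketch of how they fall out of a confusion matrix, plus ROC points and the area under the curve via the trapezoid rule. The function names are mine, and I assume binary labels with 1 as the positive class.

```python
def confusion_counts(labels, predictions):
    """Return (true positives, false positives, true negatives, false negatives)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    return (tp + tn) / (tp + fp + tn + fn)


def sensitivity(tp, fn):
    """True positive rate (also called recall)."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate."""
    return tn / (tn + fp)


def roc_points(labels, scores):
    """(false positive rate, true positive rate) at every distinct threshold, high to low."""
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        preds = [1 if s >= t else 0 for s in scores]
        tp, fp, tn, fn = confusion_counts(labels, preds)
        points.append((fp / (fp + tn), tp / (tp + fn)))  # FPR = 1 - specificity
    return points


def auc(points):
    """Area under the ROC curve using the trapezoid rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
preds = [1 if s >= 0.5 else 0 for s in scores]
tp, fp, tn, fn = confusion_counts(labels, preds)
print(accuracy(tp, fp, tn, fn), sensitivity(tp, fn), specificity(tn, fp))  # 0.5 0.5 0.5
print(auc(roc_points(labels, scores)))  # 0.75
```

A single threshold (0.5 here) gives one accuracy/sensitivity/specificity triple; sweeping all thresholds traces the ROC curve, and its area summarizes how well the scores rank positives above negatives regardless of threshold.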

Also, there were no coding exercises, problem sets, or even final exam problems that dealt with machine learning, so students had no way to practice and cement their knowledge in this area.

I think the biggest improvement the course staff could make is to put a bigger focus on machine learning (with concrete exercises) so that this course could truly stand out among other online data science courses that use Python. As it stands, there is too little practical data science in it.

3. Additional suggestions

Below are some of my more minor suggestions that could make this course even better:

a. Make the source code PEP 8-compliant: no more def KNearestClassify, please (PEP 8 calls for lowercase_with_underscores function names).

b. Explain implementation choices instead of just making them up front and having students implement them: there was a lot of object-oriented code in this class (too much, it felt like), and I wish there had been more explanation of why an OOP style was used, rather than merely showing the classes during lectures and having students fill in each class’s implementation in the problem sets.

Granted, the professor did explain why using classes was useful in certain cases, and the automatic grader might limit how flexible the implementation can be, but a good explanation might be the key for students to understand the benefit of a particular strategy and use it in the future.

c. Fix small errors in the lectures and in the textbook: the first time the professor made an error or a typo in the code was cute, but when it happened several times, sometimes within the same 5-minute lecture, it got a little distracting. The book also has some small mistakes that could be fixed, and, like the lectures, it could explain the implementation strategies for some problems more fully.
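To make suggestion (a) concrete: under PEP 8, a function named KNearestClassify would become k_nearest_classify. The body below is a hypothetical k-nearest-neighbors classifier I wrote for illustration, not the course’s code; the (features, label) data layout and the use of Euclidean distance via math.dist (Python 3.8+) are my assumptions.

```python
import math
from collections import Counter


def k_nearest_classify(training, point, k=3):
    """Label `point` by majority vote among its k nearest training examples.

    training: list of (features, label) pairs; features are equal-length tuples of numbers.
    """
    nearest = sorted(training, key=lambda example: math.dist(example[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


training = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
            ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
print(k_nearest_classify(training, (0.5, 0.5)))  # a
print(k_nearest_classify(training, (5.5, 5.5)))  # b
```

Nothing about the algorithm changes with the rename; snake_case names simply match the rest of the Python ecosystem, which is what students will be reading and writing after the course.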

Although these suggestions might seem like complaints, I’m very happy with the course overall, not least because I learned a lot from it despite the occasional challenges. Thank you, MIT and the EdX staff, for bringing quality education to all corners of the globe so that average Joes like me can enrich our lives with it.